Node.js uses a “Single Threaded Event Loop” architecture to handle multiple concurrent clients. How does it handle concurrent client requests without using multiple threads? What is the Event Loop model? We will discuss these concepts one by one.

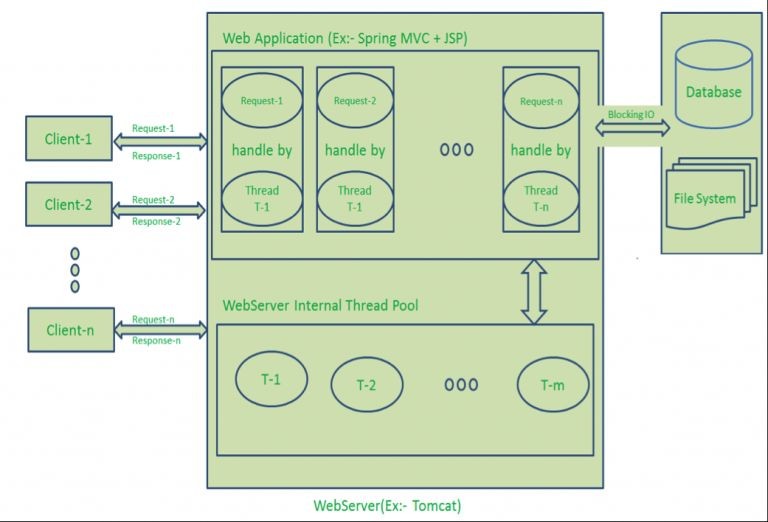

Traditional Web Application Processing Model

A web application developed without Node.js typically follows the “Multi-Threaded Request-Response” model. We can simply call this the Request/Response Model.

The client sends a request to the server; the server does some processing based on the client’s request, prepares a response, and sends it back to the client.

This model uses the HTTP protocol. As HTTP is a stateless protocol, this Request/Response model is also a stateless model, so we can call it the Request/Response Stateless Model.

However, this model uses multiple threads to handle concurrent client requests. Before discussing its internals, first go through the accompanying diagram.

Request/Response Model Processing Steps:

- Clients send requests to the web server.

- The web server internally maintains a limited thread pool to serve client requests.

- The web server runs an infinite loop, waiting for incoming client requests.

- The web server receives those requests.

- The web server picks up one client request.

- It picks up one thread from the thread pool.

- It assigns this thread to the client request.

- This thread takes care of reading the client request, processing it, performing any blocking IO operations (if required), and preparing the response.

- This thread sends the prepared response back to the web server.

- The web server in turn sends this response to the respective client.

The server waits in an infinite loop and performs all the sub-steps above for all n clients. That means this model dedicates one thread to each client request for as long as that request is being processed.

If many client requests require blocking IO operations, then almost all threads are busy preparing their responses, and the remaining client requests have to wait longer.

As in the diagram, “n” clients (Client-1, Client-2… and Client-n) send requests to the web server when they access the web application concurrently. The web server internally maintains a limited thread pool; assume it holds “m” threads. The web server receives those requests one by one.

- The web server picks up Client-1 Request-1, picks up one thread T-1 from the thread pool, and assigns this request to thread T-1.

- Thread T-1 reads Client-1 Request-1 and processes it.

- Client-1 Request-1 does not require any blocking IO operations.

- Thread T-1 does the necessary steps, prepares Response-1, and sends it back to the server.

- The web server in turn sends Response-1 to Client-1.

- The web server picks up Client-2 Request-2, picks up one thread T-2 from the thread pool, and assigns this request to thread T-2.

- Thread T-2 reads Client-2 Request-2 and processes it.

- Client-2 Request-2 does not require any blocking IO operations.

- Thread T-2 does the necessary steps, prepares Response-2, and sends it back to the server.

- The web server in turn sends Response-2 to Client-2.

- The web server picks up Client-n Request-n, picks up one thread T-n from the thread pool, and assigns this request to thread T-n.

- Thread T-n reads Client-n Request-n and processes it.

- Client-n Request-n requires heavy blocking IO and computation operations.

- Thread T-n takes more time to interact with external systems, does the necessary steps, prepares Response-n, and sends it back to the server.

- The web server in turn sends Response-n to Client-n.

If “n” is greater than “m” (which is true most of the time), the server assigns threads to client requests only up to the number of available threads. After all m threads are in use, each remaining client request waits in the queue until a busy thread finishes its request-processing job and is free to pick up the next request.

If those threads are busy with blocking IO tasks (for example, interacting with a database, file system, JMS queue, external services, etc.) for a long time, the remaining clients wait longer.

- Once threads in the thread pool are free and available for the next tasks, the server picks them up and assigns them to the remaining client requests.

- Each thread uses resources such as memory, so before a thread goes from the busy state back to the waiting state, it should release all the resources it acquired.
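The queueing effect described above can be sketched with a small simulation. This is a toy model, not how any particular web server is implemented: `simulateThreadPool` is a hypothetical helper, all requests are assumed to arrive at time 0, and each thread is assumed to handle one request at a time.

```javascript
// Toy simulation (hypothetical helper): m pool threads serve requests
// in arrival order; every request is assumed to arrive at time 0.
function simulateThreadPool(durationsMs, m) {
  const freeAt = new Array(m).fill(0); // time at which each thread becomes free

  return durationsMs.map((duration) => {
    // Pick the thread that becomes free earliest.
    let idx = 0;
    for (let i = 1; i < m; i++) {
      if (freeAt[i] < freeAt[idx]) idx = i;
    }
    const start = freeAt[idx];
    freeAt[idx] = start + duration;
    return { waitedMs: start, finishedAtMs: freeAt[idx] };
  });
}

// n = 5 requests of 50 ms each, m = 2 threads.
const results = simulateThreadPool([50, 50, 50, 50, 50], 2);
console.log(results.map((r) => r.waitedMs)); // [ 0, 0, 50, 50, 100 ]
```

With only two threads, the third and fourth requests wait 50 ms and the fifth waits 100 ms before any thread even starts on them, which is the waiting the text describes when n exceeds m.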

Drawbacks of Request/Response Stateless Model:

- Handling a growing number of concurrent client requests is difficult.

- As concurrent client requests increase, the server needs more and more threads, which consume more memory.

- Sometimes client requests have to wait for a thread to become available.

- Threads waste time waiting on blocking IO tasks.

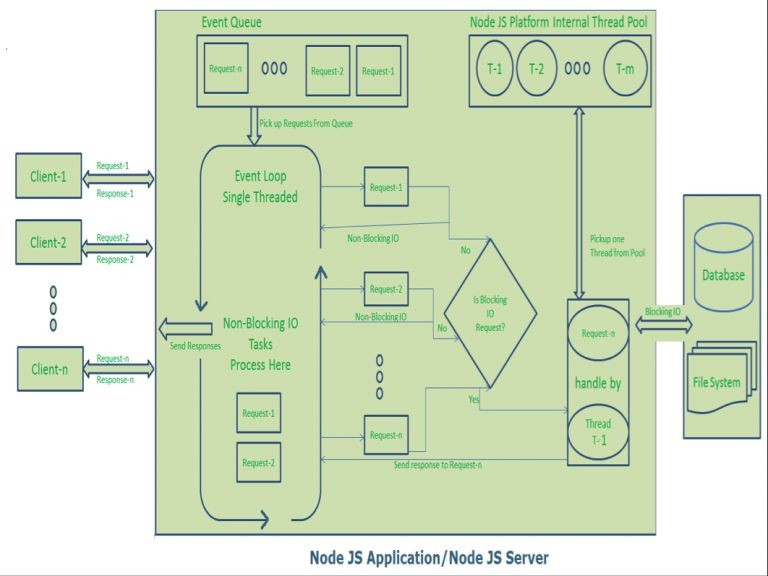

Node.js – Single Threaded Event Loop

Node.js does not follow the Request/Response Multi-Threaded Stateless Model. It follows the Single Threaded Event Loop Model. The Node.js processing model is mainly based on the JavaScript event-based model with the JavaScript callback mechanism.

Because Node.js follows this architecture, it can handle a large number of concurrent client requests efficiently. Before discussing this model’s internals, first go through the accompanying diagram.

The heart of the Node.js processing model is the “Event Loop”. Once we understand it, the Node.js internals are much easier to understand.

Single Threaded Event Loop Model Processing Steps:

- Clients send requests to the web server.

- The Node.js web server internally maintains a limited thread pool to serve client requests.

- The Node.js web server receives those requests and places them into a queue, known as the “Event Queue”.

- The Node.js web server internally has a component known as the “Event Loop”. It gets this name because it uses an indefinite loop to receive requests and process them.

- The Event Loop uses a single thread only. It is the heart of the Node.js processing model.

- The Event Loop checks whether any client request is placed in the Event Queue. If not, it waits indefinitely for incoming requests.

- If so, it picks up one client request from the Event Queue.

- It starts processing that client request.

- If that client request does not require any blocking IO operations, it processes everything, prepares the response, and sends it back to the client.

- If that client request requires blocking IO operations, such as interacting with a database, the file system, or external services, it follows a different approach.

- It checks for an available thread in the internal thread pool.

- It picks up one thread and assigns this client request to that thread.

- That thread is responsible for taking the request, processing it, performing the blocking IO operations, preparing the response, and sending it back to the Event Loop.

- The Event Loop in turn sends that response to the respective client.
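The steps above can be sketched as a toy event loop. This is only a model of the description, not the actual Node.js implementation: `eventQueue`, `offloadToThreadPool`, and `runEventLoop` are hypothetical names, and the "thread pool" is simulated by queueing a completion event rather than running a real thread.

```javascript
// Toy model of the steps above; NOT the real Node.js implementation.
const eventQueue = []; // pending events, processed one at a time
const responses = [];  // responses in the order they are sent

function sendResponse(text) {
  responses.push(text);
  console.log(text);
}

// Stand-in for the internal thread pool. A real pool thread would perform
// the blocking operation in parallel; here we only queue its completion.
function offloadToThreadPool(request) {
  eventQueue.push({
    name: `${request.name} (I/O finished)`,
    blocking: false,
    handle: () => sendResponse(`Response for ${request.name}`),
  });
}

function runEventLoop() {
  while (eventQueue.length > 0) {
    const event = eventQueue.shift(); // pick up one event
    if (event.blocking) {
      offloadToThreadPool(event);     // hand it off and keep looping
    } else {
      event.handle();                 // process it on the single thread
    }
  }
}

eventQueue.push(
  { name: 'Request-1', blocking: false, handle: () => sendResponse('Response for Request-1') },
  { name: 'Request-n', blocking: true },
  { name: 'Request-2', blocking: false, handle: () => sendResponse('Response for Request-2') }
);
runEventLoop();
```

Even though Request-n arrives before Request-2, the loop does not wait for it: Request-2 is answered first and the response for Request-n is sent once its completion event reaches the front of the queue.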

As in the diagram, “n” clients (Client-1, Client-2… and Client-n) send requests to the web server when they access the web application concurrently. The web server internally maintains a limited thread pool; assume it holds “m” threads. The Node.js web server receives the Client-1, Client-2… and Client-n requests and places them in the Event Queue. The Node.js Event Loop picks up those requests one by one.

- The Event Loop picks up Client-1 Request-1.

- It checks whether Client-1 Request-1 requires any blocking IO operations or takes a long time for complex computation tasks.

- As this request is a simple computation and non-blocking IO task, it does not require a separate thread to process it.

- The Event Loop processes all the steps in the Client-1 Request-1 operation (here, operations means JavaScript functions) and prepares Response-1.

- The Event Loop sends Response-1 to Client-1.

- The Event Loop picks up Client-2 Request-2.

- It checks whether Client-2 Request-2 requires any blocking IO operations or takes a long time for complex computation tasks.

- As this request is a simple computation and non-blocking IO task, it does not require a separate thread to process it.

- The Event Loop processes all the steps in the Client-2 Request-2 operation and prepares Response-2.

- The Event Loop sends Response-2 to Client-2.

- The Event Loop picks up Client-n Request-n.

- It checks whether Client-n Request-n requires any blocking IO operations or takes a long time for complex computation tasks.

- As this request is a very complex computation or blocking IO task, the Event Loop does not process this request itself.

- The Event Loop picks up thread T-1 from the internal thread pool and assigns Client-n Request-n to thread T-1.

- Thread T-1 reads and processes Request-n, performs the necessary blocking IO or computation task, and finally prepares Response-n.

- Thread T-1 sends Response-n to the Event Loop.

- The Event Loop in turn sends Response-n to Client-n.

Here, a client request is a call to one or more JavaScript functions. JavaScript functions may call other functions or may make use of callbacks.
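A minimal sketch of this flow in real Node.js code, under some assumptions: the handler names and the `order` array are hypothetical, and `crypto.pbkdf2` is used only as a convenient stand-in for a heavy task, because its asynchronous form is documented to run on libuv's thread pool. Note that ordinary JavaScript computation is not moved to the pool automatically; only APIs that are implemented that way run off the event loop thread.

```javascript
const crypto = require('crypto');

const order = []; // records the order in which responses are produced

// Simple request: handled entirely on the event loop thread.
function handleSimpleRequest(name) {
  order.push(`Response for ${name}`);
}

// Heavier request: the asynchronous crypto.pbkdf2 does its work off the
// event loop thread and invokes the callback on the event loop when done.
function handleHeavyRequest(name, onDone) {
  crypto.pbkdf2('password', 'salt', 100000, 32, 'sha256', (err) => {
    if (err) throw err;
    order.push(`Response for ${name}`);
    onDone();
  });
}

const done = new Promise((resolve) => {
  handleHeavyRequest('Request-n', resolve); // started first
  handleSimpleRequest('Request-1');
  handleSimpleRequest('Request-2');
});

done.then(() => console.log(order));
// [ 'Response for Request-1', 'Response for Request-2', 'Response for Request-n' ]
```

The heavy request is started first but answered last, because the event loop keeps serving the simple requests while the pool thread works.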

Node.js – Single Threaded Event Loop Advantages

- Handling a growing number of concurrent client requests is easy.

- Even when a Node.js application receives more and more concurrent client requests, it does not need to create more and more threads, because of the Event Loop.

- A Node.js application uses fewer threads, so it uses fewer resources such as memory.

Blocking vs Non-Blocking

This overview covers the difference between blocking and non-blocking calls in Node.js. This overview will refer to the event loop and libuv but no prior knowledge of those topics is required. Readers are assumed to have a basic understanding of the JavaScript language and Node.js callback pattern. “I/O” refers primarily to interaction with the system’s disk and network supported by libuv.

Blocking – Blocking is when the execution of additional JavaScript in the Node.js process must wait until a non-JavaScript operation completes. This happens because the event loop is unable to continue running JavaScript while a blocking operation is occurring.

In Node.js, JavaScript that exhibits poor performance due to being CPU intensive rather than waiting on a non-JavaScript operation, such as I/O, isn’t typically referred to as blocking. Synchronous methods in the Node.js standard library that use libuv are the most commonly used blocking operations. Native modules may also have blocking methods.

All of the I/O methods in the Node.js standard library provide asynchronous versions, which are non-blocking, and accept callback functions. Some methods also have blocking counterparts, which have names that end with Sync.
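As a quick illustration of the naming convention, the following sketch lists the `fs` functions whose names end in `Sync` and that also have an asynchronous version without the suffix. The variable name `pairs` is only illustrative.

```javascript
const fs = require('fs');

// Names of fs functions that exist both as "name" (asynchronous,
// callback-based) and "nameSync" (blocking).
const pairs = Object.keys(fs)
  .filter((name) => name.endsWith('Sync') && typeof fs[name] === 'function')
  .map((name) => name.slice(0, -'Sync'.length))
  .filter((asyncName) => typeof fs[asyncName] === 'function');

console.log(pairs.includes('readFile')); // true
console.log(pairs.includes('unlink'));   // true
```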

Comparing Code – Blocking methods execute synchronously and non-blocking methods execute asynchronously. Using the File System module as an example, this is a synchronous file read:

    const fs = require('fs');

    const data = fs.readFileSync('/file.md'); // blocks here until file is read

And here is an equivalent asynchronous example:

    const fs = require('fs');

    fs.readFile('/file.md', (err, data) => {
      if (err) throw err;
    });

The first example appears simpler than the second but has the disadvantage of the second line blocking the execution of any additional JavaScript until the entire file is read. Note that in the synchronous version if an error is thrown it will need to be caught or the process will crash. In the asynchronous version, it is up to the author to decide whether an error should throw as shown.
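The difference in error handling can be sketched as follows. The file name is hypothetical and is assumed not to exist in the temporary directory, so both calls fail with `ENOENT`; wrapping the callback in a Promise is only done here so the result can be observed.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical path that is assumed not to exist.
const missing = path.join(os.tmpdir(), 'no-such-file-for-this-example.md');

// Synchronous version: the error is thrown and must be caught.
let syncErrorCode = null;
try {
  fs.readFileSync(missing);
} catch (err) {
  syncErrorCode = err.code;
}
console.log('sync error:', syncErrorCode);

// Asynchronous version: the error arrives as the first callback argument,
// and the author decides what to do with it.
const asyncErrorCode = new Promise((resolve) => {
  fs.readFile(missing, (err) => {
    resolve(err ? err.code : null);
  });
});
asyncErrorCode.then((code) => console.log('async error:', code));
```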

Let’s expand our example a little bit:

    const fs = require('fs');

    const data = fs.readFileSync('/file.md'); // blocks here until file is read
    console.log(data);
    // moreWork(); will run after console.log

And here is a similar, but not equivalent asynchronous example:

    const fs = require('fs');

    fs.readFile('/file.md', (err, data) => {
      if (err) throw err;
      console.log(data);
    });
    // moreWork(); will run before console.log

In the first example above, console.log will be called before moreWork(). In the second example fs.readFile() is non-blocking so JavaScript execution can continue and moreWork() will be called first. The ability to run moreWork() without waiting for the file read to complete is a key design choice that allows for higher throughput.
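The ordering can be checked with a runnable variant of the two examples. This sketch makes a few assumptions: it creates its own temporary file instead of using `/file.md`, `moreWork` is a hypothetical placeholder, and the steps are recorded in an `order` array instead of being printed one by one.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Create a small temporary file to read (stand-in for /file.md).
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'order-demo-'));
const file = path.join(dir, 'file.md');
fs.writeFileSync(file, '# hello\n');

const order = [];
function moreWork() {
  order.push('moreWork');
}

// Blocking: the read finishes before moreWork() runs.
fs.readFileSync(file, 'utf8');
order.push('sync read');
moreWork();

// Non-blocking: moreWork() runs before the read callback.
const finished = new Promise((resolve, reject) => {
  fs.readFile(file, 'utf8', (err) => {
    if (err) return reject(err);
    order.push('async read');
    resolve(order);
  });
});
moreWork();

finished.then((result) => console.log(result));
// [ 'sync read', 'moreWork', 'moreWork', 'async read' ]
```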

Concurrency and Throughput – JavaScript execution in Node.js is single threaded, so concurrency refers to the event loop’s capacity to execute JavaScript callback functions after completing other work. Any code that is expected to run in a concurrent manner must allow the event loop to continue running as non-JavaScript operations, like I/O, are occurring.

As an example, let’s consider a case where each request to a web server takes 50ms to complete and 45ms of that 50ms is database I/O that can be done asynchronously. Choosing non-blocking asynchronous operations frees up that 45ms per request to handle other requests. This is a significant difference in capacity just by choosing to use non-blocking methods instead of blocking methods.
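A rough back-of-the-envelope version of this example, as a sketch only: it assumes the 45ms of database I/O overlaps perfectly with other requests and that the database itself can keep up, so the non-blocking figure is an idealized upper bound for a single thread, not a measurement.

```javascript
const totalMs = 50;          // time per request (from the example above)
const ioMs = 45;             // part of it spent waiting on database I/O
const jsMs = totalMs - ioMs; // 5 ms of actual JavaScript work per request

// Blocking: the thread is occupied for the full 50 ms of every request.
const blockingPerSecond = 1000 / totalMs;

// Non-blocking (idealized): the thread is occupied only for the 5 ms of
// JavaScript work, and the I/O waits overlap with other requests.
const nonBlockingPerSecond = 1000 / jsMs;

console.log(blockingPerSecond);    // 20
console.log(nonBlockingPerSecond); // 200
```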

The event loop is different than models in many other languages where additional threads may be created to handle concurrent work.

Dangers of Mixing Blocking and Non-Blocking Code – There are some patterns that should be avoided when dealing with I/O. Let’s look at an example:

    const fs = require('fs');

    fs.readFile('/file.md', (err, data) => {
      if (err) throw err;
      console.log(data);
    });
    fs.unlinkSync('/file.md');

In the above example, fs.unlinkSync() is likely to be run before fs.readFile(), which would delete file.md before it is actually read. A better way to write this, which is completely non-blocking and guaranteed to execute in the correct order is:

    const fs = require('fs');

    fs.readFile('/file.md', (readFileErr, data) => {
      if (readFileErr) throw readFileErr;
      console.log(data);
      fs.unlink('/file.md', (unlinkErr) => {
        if (unlinkErr) throw unlinkErr;
      });
    });

The above places a non-blocking call to fs.unlink() within the callback of fs.readFile() which guarantees the correct order of operations.
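The same ordering can also be expressed with the promise-based `fs.promises` API and `async`/`await`, which avoids nesting callbacks. This is an alternative sketch, not part of the original example: `readThenDelete` is a hypothetical helper, and a temporary file is created so the code can run anywhere.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Reads the file, then deletes it; each await yields to the event loop,
// and unlink only starts after the read has completed.
async function readThenDelete(file) {
  const data = await fs.promises.readFile(file, 'utf8');
  await fs.promises.unlink(file);
  return data;
}

// Temporary file standing in for /file.md.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'unlink-demo-'));
const file = path.join(dir, 'file.md');
fs.writeFileSync(file, 'some content');

const result = readThenDelete(file).then((data) => {
  console.log(data); // some content
  return { data, stillExists: fs.existsSync(file) };
});
```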