A MapReduce program is composed of four program components:

- Mapper

- Partitioner

- Combiner

- Reducer

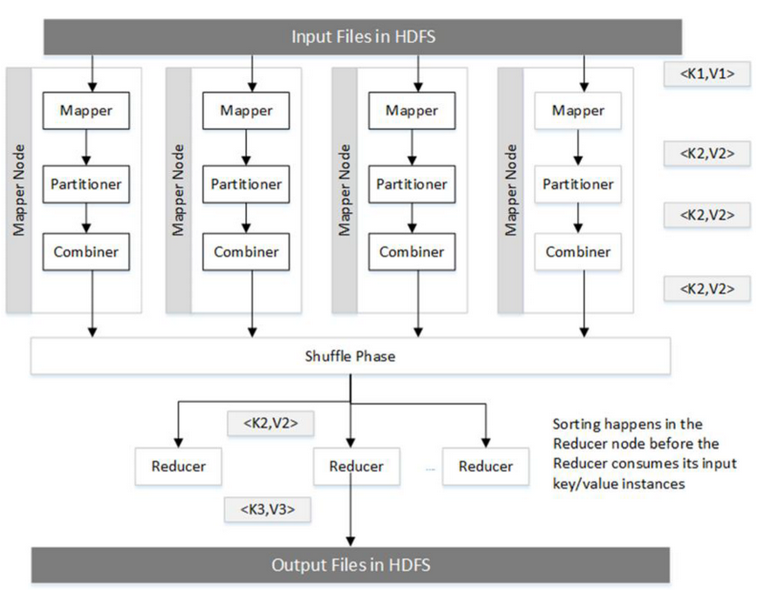

These components execute in a distributed environment across multiple JVMs. The two JVMs that matter to a MapReduce developer are the one that executes a Mapper instance and the one that executes a Reducer instance. Every component you develop will execute in one of these JVMs. Unless you have turned on JVM reuse, which is not recommended, each Mapper and Reducer instance executes in its own JVM. The accompanying diagram shows the various components and the nodes on which they execute.

The Mapper, Partitioner and the Combiner all execute on the Mapper node in a single JVM designated for the Mapper. This has many implications. Static variables set in one component can be accessed by other components. If you like using the Spring API, you can exchange messages by configuring a set of objects using dependency injection. As we will discuss soon, these components will execute simultaneously during certain periods of time along with other framework threads which will be launched for various house-keeping tasks.
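
To make the shared-JVM point concrete, here is a minimal stand-alone sketch in plain Java, not Hadoop code. The class `SharedJvmStateSketch` and its nested `MapSide` and `PartitionSide` classes are hypothetical stand-ins for a Mapper and a Partitioner or Combiner. Because these components can run at the same time on different threads, the sketch assumes a thread-safe `AtomicLong` rather than a bare `long`.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical illustration only; none of these classes are part of Hadoop.
class SharedJvmStateSketch {

    // A static field exists once per JVM, so every component loaded
    // in the Mapper JVM sees the same counter.
    static final AtomicLong recordsEmitted = new AtomicLong();

    // Stand-in for map-side code that emits records.
    static class MapSide {
        void emit(String key) {
            recordsEmitted.incrementAndGet();
        }
    }

    // Stand-in for a Partitioner or Combiner reading the same static field.
    static class PartitionSide {
        long seenSoFar() {
            return recordsEmitted.get();
        }
    }

    public static void main(String[] args) {
        MapSide mapSide = new MapSide();
        mapSide.emit("apple");
        mapSide.emit("fig");
        System.out.println("records seen by partition side: "
                + new PartitionSide().seenSoFar());
    }
}
```

The same mechanism does not reach the Reducer, which runs in a separate JVM and therefore has its own copy of every static field.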

The goal of a Mapper instance, along with the corresponding Partitioner and Combiner instances, is to produce partitions (files), one per Reducer.

A MapReduce program has two main phases:

- Map

- Reduce

The Map Phase

As a developer, the key interaction you will have with the Hadoop framework is when you make the context.write(…) invocation. The following is an example invocation from a sample WordCountMapper:

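```java
import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Declared static, so it is meant to be nested inside the job's driver class.
public static class WordCountMapper
    extends Mapper<LongWritable, Text, Text, IntWritable> {
  @Override
  public void map(LongWritable key, Text value, Context context)
      throws IOException, InterruptedException {
    String w = value.toString();
    // Emit (w, 1); the framework takes over from this call.
    context.write(new Text(w), new IntWritable(1));
  }
}
```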

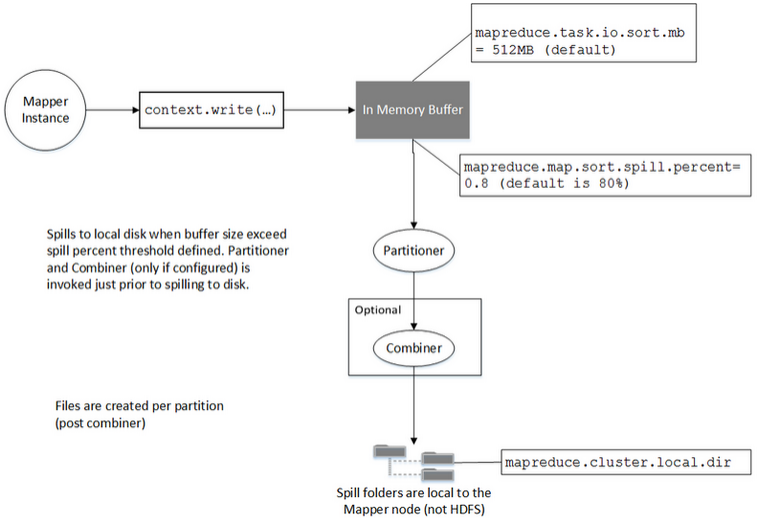

This is the invocation where the key and value pairs emitted by the Mapper are sent to the Reducer instance, which runs in a separate JVM and typically on a separate node. In this section, we will review what happens behind the scenes when you make this call. The accompanying figure shows what happens on the Mapper node when you invoke this method.

The goal of the Mapper is to produce a partitioned file sorted by the Mapper output keys. The partitioning is with respect to the Reducers that the keys are meant to be processed by. After the Mapper emits a key and value pair, the pair is fed to a Partitioner instance that runs in the same JVM as the Mapper. The Partitioner assigns each pair to a partition based on the number of Reducers and any custom partitioning logic. The results are then sorted by the Mapper output key.
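
As a rough illustration of the partition-then-sort step, the following stand-alone sketch assigns each key to a reducer and keeps the keys of each partition in sorted order. It uses plain Java collections instead of Hadoop classes, and the class `PartitionSortSketch` and its methods are hypothetical. The partition formula follows the hash-modulo scheme used by Hadoop's default `HashPartitioner`, although `String.hashCode()` here will not produce the same values as Hadoop's `Text.hashCode()`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

// Hypothetical stand-alone sketch; not Hadoop's actual implementation.
class PartitionSortSketch {

    // Hash-modulo partitioning; masking the sign bit keeps the result non-negative.
    static int partitionFor(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Routes each key (with an implied value of 1) to its partition.
    // A TreeMap keeps the keys inside each partition sorted.
    static List<TreeMap<String, List<Integer>>> partitionAndSort(
            List<String> keys, int numReducers) {
        List<TreeMap<String, List<Integer>>> partitions = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            partitions.add(new TreeMap<>());
        }
        for (String key : keys) {
            partitions.get(partitionFor(key, numReducers))
                    .computeIfAbsent(key, k -> new ArrayList<>())
                    .add(1);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("pear", "apple", "fig", "apple", "kiwi");
        List<TreeMap<String, List<Integer>>> parts = partitionAndSort(words, 2);
        for (int p = 0; p < parts.size(); p++) {
            System.out.println("partition " + p + " -> " + parts.get(p));
        }
    }
}
```

Every occurrence of a given key lands in the same partition, which is what lets a single Reducer see all the values for that key.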

At this point, the Combiner is invoked (if a Combiner is configured for the job) on the sorted output. Note that the Combiner is invoked after the Partitioner in the same JVM as the Mapper.
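
For a word-count style job, the Combiner's effect on one sorted partition can be sketched as below. This is a simplified stand-in written with plain Java collections. The class `CombinerSketch` and its `combine` method are hypothetical, and summing is assumed as the combine function because it matches the word-count example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-alone sketch; not Hadoop's Combiner API.
class CombinerSketch {

    // Collapses the list of values for each key into one partial sum,
    // preserving the sorted key order of the input partition.
    static TreeMap<String, Integer> combine(TreeMap<String, List<Integer>> sortedPartition) {
        TreeMap<String, Integer> combined = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : sortedPartition.entrySet()) {
            int sum = 0;
            for (int value : entry.getValue()) {
                sum += value;
            }
            combined.put(entry.getKey(), sum);
        }
        return combined;
    }

    public static void main(String[] args) {
        TreeMap<String, List<Integer>> partition = new TreeMap<>();
        partition.put("fig", Arrays.asList(1));
        partition.put("apple", Arrays.asList(1, 1, 1));
        System.out.println(combine(partition));
    }
}
```

The combined partition carries one record per key instead of one per occurrence, so less data is written to disk and sent to the Reducer.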

Finally, this partitioned, sorted and combined output is spilled to the disk. Optionally, the Mapper intermediate outputs can be compressed. Compression reduces disk I/O because the Mapper output files are written to the disk on the Mapper node. Compression also reduces network I/O because the compressed files are transferred to the Reducer nodes.
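
To get a feel for why compressing intermediate output cuts I/O, the sketch below gzips a buffer of repetitive (word, 1) records with `java.util.zip` and compares the sizes. It does not use Hadoop's codecs, and the class `SpillCompressionSketch` and the sample records are hypothetical. In a real job, map output compression is switched on through job configuration (in Hadoop 2 and later, the `mapreduce.map.output.compress` property) rather than in your own code.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

// Hypothetical stand-alone sketch; Hadoop applies its own codecs internally.
class SpillCompressionSketch {

    // Compresses a byte buffer with gzip and returns the compressed bytes.
    static byte[] gzip(byte[] raw) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    // Builds tab-separated "word<TAB>1" lines, similar to word-count map output.
    static byte[] sampleRecords(int count) {
        String[] words = {"apple", "fig", "kiwi", "pear"};
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < count; i++) {
            sb.append(words[i % words.length]).append('\t').append(1).append('\n');
        }
        return sb.toString().getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] raw = sampleRecords(1000);
        byte[] compressed = gzip(raw);
        System.out.println("raw bytes: " + raw.length
                + ", gzip bytes: " + compressed.length);
    }
}
```

The saving depends on the data and the codec, and compression costs CPU time on both the Mapper and Reducer sides, so it is worth measuring on your own job.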
