A/B testing, also known as split testing, means creating and analyzing two variants of an application to find out which one performs better on a chosen goal, such as user experience, leads, or conversions. The better-performing variant is then kept.

Running an A/B test that directly compares a variation against the current experience lets you ask focused questions about changes to your website or app, and then collect data about the impact of each change.

Testing takes the guesswork out of website optimization and enables data-informed decisions that shift business conversations from “we think” to “we know.” By measuring the impact that changes have on your metrics, you can check whether each change actually produces positive results before rolling it out to everyone.

How A/B Testing Works

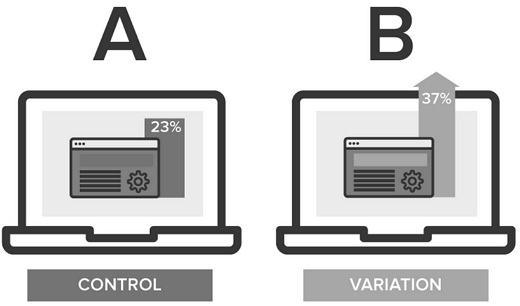

In an A/B test, you take a webpage or app screen and modify it to create a second version of the same page. This change can be as simple as a single headline or button, or it can be a complete redesign of the page. Then, typically, half of your traffic is shown the original version of the page (known as the control) and half is shown the modified version (the variation).
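One common way to implement the traffic split described above is to hash a stable user identifier, so that the same user always sees the same version. The following is a minimal sketch, not a definitive implementation: the function name `assign_variant`, the experiment name `listing-page-ui`, and the `share_b` parameter are all hypothetical and chosen only for illustration.

```python
import hashlib


def assign_variant(user_id: str,
                   experiment: str = "listing-page-ui",  # hypothetical experiment name
                   share_b: float = 0.5) -> str:
    """Return "A" (control) or "B" (variation) for a given user.

    The assignment is deterministic: the same user_id and experiment
    always map to the same variant, so a returning user does not flip
    between versions.
    """
    if not 0.0 <= share_b <= 1.0:
        raise ValueError("share_b must be between 0 and 1")

    # Hash the experiment name together with the user id so that
    # different experiments split the same users independently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()

    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64

    # Users whose bucket falls below share_b see the variation.
    return "B" if bucket < share_b else "A"


if __name__ == "__main__":
    for uid in ["user-1", "user-2", "user-3"]:
        print(uid, assign_variant(uid))
```

With `share_b=0.5` roughly half of the users land in each version. Passing a different value, for example `share_b=0.2`, sends a smaller share of traffic to the variation.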

Let’s try to understand it with an example. Suppose we have an e-commerce website that sells electronic goods directly to customers. Currently, the listing page displays 3 devices in each row with only the essential details: image, name, brand, and price. Clicking on an image leads to the device description page, which contains all the details and a buy button.

Now, the product team comes up with an idea to change the UI and display only one product in each row, with all the device details along with a buy button. The current UI lets users browse more devices at a glance, but they have to perform an additional click to see the device details and the buy option. The proposed UI gives users all the details of a device on the listing page itself, along with the buy option.

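So, basically, we will have two variants of the application: variantA (the current listing with three devices per row) and variantB (the proposed listing with one device per row, full details, and a buy button).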

No matter how much analysis is done, releasing the new UI would be a big change and might backfire. So, in this case, we can use A/B testing. We will create the variantB UI and release it to some percentage of users. For example, we may distribute users in a ratio of 50:50 or 80:20 between the two variants, A and B. After that, over a period of time, we will observe the performance of the two variants, determine which one to choose, and roll it out to all users.

In this way, A/B testing helps in deciding which variant of the application is better.
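To make "observing the performance of the two variants" concrete, one widely used approach is to compare conversion rates with a two-proportion z-test. The sketch below is only an illustration under stated assumptions: the metric is a simple conversion (a purchase or not), the function name `compare_conversion` is hypothetical, and the visitor and purchase counts are made up, not real results.

```python
from math import sqrt
from statistics import NormalDist


def compare_conversion(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-proportion z-test for the conversion rates of variants A and B.

    conv_a, conv_b: number of converting users in each variant
    n_a, n_b:       number of users shown each variant
    Returns (rate_a, rate_b, z, p_value) with a two-sided p-value.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled conversion rate under the assumption that A and B do not differ.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test: no variation in the observed outcomes")

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


if __name__ == "__main__":
    # Made-up counts for illustration only.
    rate_a, rate_b, z, p = compare_conversion(conv_a=200, n_a=5000,
                                              conv_b=260, n_b=5000)
    print(f"variantA: {rate_a:.2%}  variantB: {rate_b:.2%}")
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (a threshold of 0.05 is a common convention) suggests the difference between the variants is unlikely to be due to chance alone. The sample sizes do not have to be equal, so the same function works for an 80:20 split.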

Why Do A/B Testing

A/B testing allows individuals, teams, and companies to make careful changes to their user experiences while collecting data on the results. This allows them to construct hypotheses and to learn why certain elements of their experiences impact user behavior. It also means they can be proven wrong: an opinion about the best experience for a given goal may not hold up in an A/B test.

More than just answering a one-off question or settling a disagreement, A/B testing can be used consistently to keep improving a given experience, raising a single metric such as conversion rate over time.

For instance, a B2B technology company may want to improve their sales lead quality and volume from campaign landing pages. In order to achieve that goal, the team would try A/B testing changes to the headline, visual imagery, form fields, call to action, and overall layout of the page.

Testing one change at a time helps them pinpoint which changes had an effect on their visitors’ behavior, and which ones did not. Over time, they can combine the effect of multiple winning changes from experiments to demonstrate the measurable improvement of the new experience over the old one.