Virtualization allows multiple operating system instances to run concurrently on a single computer; it is a means of separating hardware from a single operating system. Each “guest” OS is managed by a Virtual Machine Monitor (VMM), also known as a hypervisor. Because the virtualization system sits between the guest and the hardware, it can control the guests’ use of CPU, memory, and storage, even allowing a guest OS to migrate from one machine to another.

As virtualization disentangles the operating system from the hardware, a number of very useful new tools become available. Virtualization allows an operator to control a guest operating system’s use of CPU, memory, storage, and other resources, so each guest receives only the resources that it needs. This distribution eliminates the danger of a single runaway process consuming all available memory or CPU. It also helps IT staff to satisfy service level requirements for specific applications.

Since the guest is not bound to the hardware, it also becomes possible to dynamically move an operating system from one physical machine to another. As a particular guest OS begins to consume more resources during a peak period, operators can move the offending guest to another server with less demand. This kind of flexibility changes traditional notions of server provisioning and capacity planning. With virtualized deployments, it is possible to treat computing resources like CPU, memory, and storage as a pool of resources, and applications can easily relocate to receive the resources they need at that time.
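The rebalancing idea can be illustrated with a toy placement rule: when a guest needs more CPU than its current server can spare, pick the server with the most free capacity. This is only a sketch under simplifying assumptions; the `Host` type, its fields, and `pick_migration_target` are hypothetical names invented for illustration and do not correspond to any hypervisor's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    """Hypothetical view of one physical server's CPU capacity and demand."""
    name: str
    cpu_capacity: float  # total CPU available, in arbitrary units
    cpu_used: float      # CPU currently consumed by all guests on this host

    @property
    def cpu_free(self) -> float:
        return self.cpu_capacity - self.cpu_used


def pick_migration_target(hosts: List[Host], source: Host,
                          guest_cpu: float) -> Optional[Host]:
    """Return the host with the most free CPU that can fit the guest, or None."""
    candidates = [h for h in hosts
                  if h is not source and h.cpu_free >= guest_cpu]
    if not candidates:
        return None
    return max(candidates, key=lambda h: h.cpu_free)
```

A real scheduler would also weigh memory, storage, and network locality; CPU alone is used here to keep the example short.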

Approaches

The x86 architecture from Intel and AMD is very popular in the IT industry and holds an important place in virtual machine implementations, since most of them target x86. The main types of virtualization are

Hardware virtualization – Hardware virtualization or platform virtualization refers to the creation of a virtual machine that acts like a real computer with an operating system. Software executed on these virtual machines is separated from the underlying hardware resources. For example, a computer that is running Microsoft Windows may host a virtual machine that looks like a computer with the Ubuntu Linux operating system; Ubuntu-based software can be run on the virtual machine.

In hardware virtualization, the host machine is the actual machine on which the virtualization takes place, and the guest machine is the virtual machine. The words host and guest are used to distinguish the software that runs on the actual machine from the software that runs on the virtual machine. The software or firmware that creates a virtual machine on the host hardware is called a hypervisor or Virtual Machine Manager. Different types of hardware virtualization include

- Full virtualization: Almost complete simulation of the actual hardware to allow software, which typically consists of a guest operating system, to run unmodified.

- Partial virtualization: Some but not all of the target environment is simulated. Some guest programs, therefore, may need modifications to run in this virtual environment.

- Paravirtualization: A hardware environment is not simulated; however, the guest programs are executed in their own isolated domains, as if they are running on a separate system. Guest programs need to be specifically modified to run in this environment.

Hardware-assisted virtualization is a way of improving the efficiency of hardware virtualization. It involves employing specially designed CPUs and hardware components that help improve the performance of a guest environment.

Hardware virtualization is not the same as hardware emulation. In hardware emulation, a piece of hardware imitates another, while in hardware virtualization, a hypervisor (a piece of software) imitates a particular piece of computer hardware or the entire computer. Furthermore, a hypervisor is not the same as an emulator; both are computer programs that imitate hardware, but their domain of use in language differs. Various hardware virtualization technologies are

Full Virtualization – In the full virtualization technique, the underlying software that runs a guest OS provides complete hardware support to the guest OS from the existing physical machine. If the physical machine lacks some compatible hardware, it is the job of the full virtualization technique to make the guest OS work properly by simulating that hardware. The full virtualization technique thus provides a complete environment in which to run a guest OS on a physical machine.

Full virtualization ensures that every hardware feature is reflected in the virtual machine, including input/output, the instruction set, interrupts, memory address blocks, and every other feature of the physical machine. Therefore, with full virtualization one can run any software on the guest operating system, even software that requires the primary resources of an operating system. Advantages of full virtualization are

- Sharing of a single physical machine (or its resources) to run multiple operating systems. This is very important for IT companies developing cross-platform applications, because it saves hardware cost. An application developed in a Linux environment that needs to be tested on Unix, Windows, and Mac environments would require four physical machines to run four different operating systems. With virtual machines, a single physical machine can run all of the operating systems.

- Control over users, as administrative tasks become easily manageable with virtual machines. Moreover, using a virtual operating system, an administrator can restrict a user to a particular guest OS only and can establish communication with other operating systems.

Partial virtualization – In the partial virtualization technique, the underlying software (the virtual machine) that runs a guest OS provides only partial hardware support to the guest OS from the existing physical machine. The partial hardware resources usually include memory address space, shared disk space, network resources, and so on. This means that a full guest operating system cannot run completely on the virtual machine, although most of it can. As a result, some applications that require core OS features may not be able to run on the guest operating system, but most applications run without problems. To support the guest operating system environment, the host operating system requires the reallocation of memory addresses.

Advantages of Partial Virtualization are

- Easier to implement. Time is a very important factor in the cost estimation of an IT project. Since the partial virtualization technique is easier to implement, it saves a lot of development cost.

- Capable of supporting most operating system applications. Although the full virtualization technique implements full guest OS support, it requires a lot of memory from the physical machine. With partial virtualization, the hardware requirements on the physical machine are lower than with full virtualization.

- Can run on first-generation time-sharing operating systems.

The only drawback of partial virtualization is compatibility issues with the guest OS.

Paravirtualization – In paravirtualization, the guest operating system is recompiled with some of its APIs modified, which eliminates the need to translate commands into binary. In short, the kernel of the operating system is modified to resolve the compatibility issues encountered in partial virtualization.

Paravirtualization can be implemented easily on open source operating systems such as Linux and OpenBSD. However, the most widely used operating system, Microsoft Windows, cannot be modified because it is closed source. Also, it is impossible to modify the kernel of an existing, functioning operating system.

Server virtualization – Server virtualization is the masking of server resources (including the number and identity of individual physical servers, processors, and operating systems) from server users. The intention is to spare the user from having to understand and manage complicated details of server resources while increasing resource sharing and utilization and maintaining the capacity to expand later. Server virtualization technologies help to

- Better utilize new and existing servers

- Reclaim valuable floor space

- Reduce server energy consumption and cooling requirements

- Simplify maintenance and management tasks

- Improve reliability and availability to support higher service levels

Server virtualization drives up utilization rates and drives down costs, but it also helps to achieve the flexibility needed to pursue competitive advantage. There are three ways to create virtual servers: full virtualization, para-virtualization and OS-level virtualization. They all share a few common traits. The physical server is called the host. The virtual servers are called guests. The virtual servers behave like physical machines. Each system uses a different approach to allocate physical server resources to virtual server needs.

There are two big network problems associated with server virtualization. The first is configuring virtual LANs. Network administrators need to make sure the VLAN used by the virtual machine (VM) is assigned to the same switch port as the physical server running the VM. The second problem is assigning QoS and enforcing network policies, such as access control lists (ACLs). Traditionally this is done in the network switch connected to the server running the application. With server virtualization there’s a software switch running under the hypervisor in the physical server — not the traditional physical network switch that connects to the physical server.

Virtualization is the perfect solution for applications that are meant for small- to medium-scale usage. Virtualization should not be used for high-performance applications where one or more servers need to be clustered together to meet the performance requirements of a single application, because the added overhead and complexity would only reduce performance. We’re essentially taking a 12 GHz server (four cores times 3 GHz) and chopping it up into sixteen 750 MHz servers. But if eight of those servers are in off-peak or idle mode, the remaining eight servers will have nearly 1.5 GHz available to them.
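The arithmetic behind these figures can be written out as a small calculation. It is a back-of-the-envelope sketch that treats the aggregate clock rate as evenly divisible and ignores hypervisor overhead, which is why the text says "nearly" 1.5 GHz; the function name `per_guest_ghz` is introduced here for illustration only.

```python
def per_guest_ghz(cores: int, ghz_per_core: float, active_guests: int) -> float:
    """Aggregate clock rate divided evenly among the guests that are busy."""
    if active_guests <= 0:
        raise ValueError("active_guests must be positive")
    return cores * ghz_per_core / active_guests


# Four 3 GHz cores shared by sixteen busy guests, then by eight.
all_busy = per_guest_ghz(4, 3.0, 16)   # 0.75 GHz each, i.e. 750 MHz
half_idle = per_guest_ghz(4, 3.0, 8)   # 1.5 GHz each
```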

Desktop virtualization – Desktop virtualization is the concept of separating the logical desktop from the physical machine.

One form of desktop virtualization, virtual desktop infrastructure (VDI), can be thought of as a more advanced form of hardware virtualization. Rather than interacting with a host computer directly via a keyboard, mouse, and monitor, the user interacts with the host computer using another desktop computer or a mobile device by means of a network connection, such as a LAN, wireless LAN, or even the Internet. In addition, the host computer in this scenario becomes a server computer capable of hosting multiple virtual machines at the same time for multiple users.

As organizations continue to virtualize and converge their data center environment, client architectures also continue to evolve in order to take advantage of the predictability, continuity, and quality of service delivered by their Converged Infrastructure. For example, companies like HP and IBM provide a hybrid VDI model with a range of virtualization software and delivery models to improve upon the limitations of distributed client computing. Selected client environments move workloads from PCs and other devices to data center servers, creating well-managed virtual clients, with applications and client operating environments hosted on servers and storage in the data center. For users, this means they can access their desktop from any location, without being tied to a single client device. Since the resources are centralized, users moving between work locations can still access the same client environment with their applications and data. For IT administrators, this means a more centralized, efficient client environment that is easier to maintain and able to more quickly respond to the changing needs of the user and business.

Another form, session virtualization, allows multiple users to connect and log into a shared but powerful computer over the network and use it simultaneously. Each user is given a desktop and a personal folder in which to store files. With a multiseat configuration, session virtualization can be accomplished using a single PC with multiple monitors, keyboards, and mice connected.

Thin clients, which are seen in desktop virtualization, are simple and/or cheap computers that are primarily designed to connect to the network. They may lack significant hard disk storage space, RAM or even processing power, but many organizations are beginning to look at the cost benefits of eliminating “thick client” desktops that are packed with software (and require software licensing fees) and making more strategic investments.

Moving virtualised desktops into the cloud creates hosted virtual desktops (HVD), where the desktop images are centrally managed and maintained by a specialist hosting firm. Benefits include scalability and the reduction of capital expenditure, which is replaced by a monthly operational cost.

Software virtualization – Operating system-level virtualization, hosting of multiple virtualized environments within a single OS instance. Application virtualization and workspace virtualization, the hosting of individual applications in an environment separated from the underlying OS. Application virtualization is closely associated with the concept of portable applications.

Service virtualization, emulating the behavior of dependent (e.g., third-party, evolving, or not implemented) system components that are needed to exercise an application under test (AUT) for development or testing purposes. Rather than virtualizing entire components, it virtualizes only specific slices of dependent behavior critical to the execution of development and testing tasks.

Memory virtualization – Memory virtualization, aggregating RAM resources from networked systems into a single memory pool. Virtual memory, giving an application program the impression that it has contiguous working memory, isolating it from the underlying physical memory implementation.

Storage virtualization – Storage virtualization, the process of completely abstracting logical storage from physical storage. Storage virtualization is the pooling of physical storage from multiple network storage devices into what appears to be a single storage device that is managed from a central console. Storage virtualization is commonly used in storage area networks (SANs).

Data virtualization – Data virtualization, the presentation of data as an abstract layer, independent of underlying database systems, structures and storage.

Database virtualization, the decoupling of the database layer, which lies between the storage and application layers within the overall application stack.

Network virtualization – Network virtualization, creation of a virtualized network addressing space within or across network subnets. Network virtualization is a method of combining the available resources in a network by splitting up the available bandwidth into channels, each of which is independent from the others, and each of which can be assigned (or reassigned) to a particular server or device in real time. The idea is that virtualization disguises the true complexity of the network by separating it into manageable parts, much like your partitioned hard drive makes it easier to manage your files.

Components

The purpose of virtualization is to enable a rich experience for users through a central, virtualized back end that hosts virtual machines (VMs). Here we analyze a virtual desktop infrastructure (VDI) in terms of its components. VDI lets each user connect via RDP to a client OS such as Windows XP or Windows Vista running as a VM on a server. For this infrastructure to function, a VDI solution must have the following elements

- A virtualization platform. The platform hosts VMs with the client operating systems. The platform must have the capacity to host enough VMs for all concurrently connected users. Examples of virtualization platforms include Hyper-V and VMware ESX Server.

- A protocol for the users to connect to the virtualized OS. This protocol could be the RDP protocol that’s part of XP and Vista or an add-on protocol. The protocol will also handle certain features such as device and printer redirection. Your decision about a protocol will depend on the device end users use to connect, such as a thin client or a remote client under a full OS.

- A virtual management platform. This platform manages the servers and helps provision VMs quickly and efficiently. This platform not only creates VMs, but also uses templates and libraries of disk images to provision the client OSs in the VMs. The virtual management platform ensures there is always a pool of VMs available for new connections.

- A session broker. The session broker is responsible for distributing sessions from clients to VMs and redirecting users of disconnected sessions back to their original VMs. Windows Server 2008 R2 will provide a session broker for VDI. Also, Microsoft has partnered with Citrix to allow use of XenDesktop as a session broker for Microsoft VDI. VMware uses VMware View Manager as its preferred session broker.

- Application virtualization. Application virtualization enables fast availability of applications to the virtual client OSs. Standard application installation methods can be used, such as deploying via MSI files, but the install time may be several minutes. This wait is undesirable and leads to a poor end user experience and a loss of productivity while users wait for the application to install. Solutions for application virtualization include Microsoft Application Virtualization and VMware ThinApp.

- Profile and data redirection. Users customize their environments and it’s vital that these customizations and configuration are maintained between connections. Profile and data redirection ensure that if a user switches between VMs they have a consistent environment. It’s also important that any data the user stores, including folders such as Documents, is stored on a server, and that’s another task for profile and data redirection.

- Client devices. These devices are the point of access and could be thin clients or clients running software on OSs such as Windows, Linux, or others supported by the VDI solution.
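Of the elements listed, the session broker has the most algorithmic role: it distributes new sessions across the pool of VMs and sends users with disconnected sessions back to their original VM. The following is a minimal sketch of that behaviour, not a model of any vendor's broker; the `SessionBroker` class and the VM names are hypothetical.

```python
from typing import Dict, List


class SessionBroker:
    """Toy broker: hands out pooled VMs and remembers each user's VM."""

    def __init__(self, vm_pool: List[str]) -> None:
        self._free: List[str] = list(vm_pool)  # VMs not assigned to anyone
        self._assigned: Dict[str, str] = {}    # user -> VM, kept across disconnects

    def connect(self, user: str) -> str:
        """Return the user's existing VM if any, else take one from the pool."""
        if user in self._assigned:
            return self._assigned[user]
        if not self._free:
            raise RuntimeError("no VMs available in the pool")
        vm = self._free.pop(0)
        self._assigned[user] = vm
        return vm

    def logoff(self, user: str) -> None:
        """End the session for good and return the VM to the pool."""
        vm = self._assigned.pop(user, None)
        if vm is not None:
            self._free.append(vm)
```

A reconnecting user calls `connect` again and receives the same VM, because the assignment is only dropped on `logoff`. In a full VDI deployment the virtual management platform would refill the free pool; that part is left out here.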

Hypervisor

So far we have used the term “virtual machine” frequently. The software that creates and runs virtual machines is called a “hypervisor”. A hypervisor runs either directly on the hardware or on a host operating system and provides the necessary resources to the guest operating systems. A hypervisor can be software, hardware, firmware, or a combination of these. It provides a platform for the guest operating systems and manages their processes and execution.

Hypervisor, also called virtual machine manager (VMM), is one of many hardware virtualization techniques allowing multiple operating systems, termed guests, to run concurrently on a host computer. It is so named because it is conceptually one level higher than a supervisory program. The hypervisor presents to the guest operating systems a virtual operating platform and manages the execution of the guest operating systems. Multiple instances of a variety of operating systems may share the virtualized hardware resources. Hypervisors are very commonly installed on server hardware, with the function of running guest operating systems, that themselves act as servers.

A hypervisor, also called a virtual machine manager, is a program that allows multiple operating systems to share a single hardware host. Each operating system appears to have the host’s processor, memory, and other resources all to itself. However, the hypervisor is actually controlling the host processor and resources, allocating what is needed to each operating system in turn and making sure that the guest operating systems (called virtual machines) cannot disrupt each other.

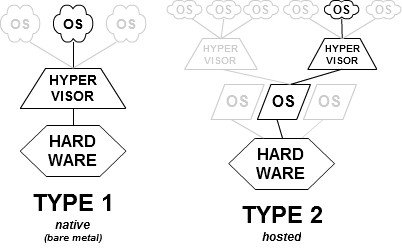

Hypervisor Types

- Type 1 (or native, bare metal) hypervisors run directly on the host’s hardware to control the hardware and to manage guest operating systems. A guest operating system thus runs on another level above the hypervisor. This model represents the classic implementation of virtual machine architectures; the original hypervisors were the test tool, SIMMON, and CP/CMS, both developed at IBM in the 1960s. CP/CMS was the ancestor of IBM’s z/VM. Modern equivalents of this are Oracle VM Server for SPARC, the Citrix XenServer, KVM, VMware ESX/ESXi, and Microsoft Hyper-V hypervisor.

- Type 2 (or hosted) hypervisors run within a conventional operating system environment. With the hypervisor layer as a distinct second software level, guest operating systems run at the third level above the hardware. VMware Workstation and VirtualBox are examples of Type 2 hypervisors. In other words, a Type 1 hypervisor runs directly on the hardware; a Type 2 hypervisor runs on another operating system, such as FreeBSD, Linux or Windows.

Virtualization Products

KVM – KVM (Kernel-based Virtual Machine) is a full virtualization solution for Linux on AMD64 and Intel 64 hardware that is built into the standard Red Hat Enterprise Linux 7 kernel and can run multiple guest operating systems. The KVM hypervisor in Red Hat Enterprise Linux is managed with the libvirt API and tools built for libvirt (such as virt-manager and virsh). Virtual machines are executed and run as multi-threaded Linux processes controlled by these tools.

Features of KVM

- Overcommitting – The KVM hypervisor supports overcommitting of system resources. Overcommitting means allocating more virtualized CPUs or memory than the available resources on the system. Memory overcommitting allows hosts to utilize memory and virtual memory to increase guest densities.

- Thin provisioning – Thin provisioning allows the allocation of flexible storage and optimizes the available space for every guest virtual machine. It gives the appearance that there is more physical storage on the guest than is actually available. This is not the same as overcommitting as this only pertains to storage and not CPUs or memory allocations.

- KSM – Kernel Same-page Merging (KSM), used by the KVM hypervisor, allows KVM guests to share identical memory pages. These shared pages are usually common libraries or other identical, high-use data. KSM allows for greater guest density of identical or similar guest operating systems by avoiding memory duplication.

- QEMU Agent – The QEMU Guest Agent runs on the guest operating system and allows the host machine to issue commands to the guest operating system.

- Disk I/O throttling – When several virtual machines are running simultaneously, they can interfere with system performance by using excessive disk I/O. Disk I/O throttling in KVM provides the ability to set a limit on disk I/O requests sent from virtual machines to the host machine. This can prevent a virtual machine from over utilizing shared resources, and impacting the performance of other virtual machines.

- Automatic NUMA balancing – Automatic NUMA balancing improves the performance of applications running on non-uniform memory access (NUMA) hardware systems, without any manual tuning required for Linux guests. Automatic NUMA balancing moves tasks, which can be threads or processes, closer to the memory they are accessing.

- Virtual CPU hot add – Virtual CPU (vCPU) hot add capability provides the ability to increase processing power as needed on running virtual machines, without downtime. The vCPUs assigned to a virtual machine can be added to a running guest in order to either meet the workload’s demands, or to maintain the Service Level Agreement (SLA) associated with the workload.
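Of the features above, KSM is the easiest to illustrate in a few lines: identical pages held by different guests need to be stored only once. The sketch below counts how many pages could be saved. It is a conceptual model only; it identifies identical pages by hashing their content, which is a stand-in chosen here for brevity and not how the kernel implements KSM. The function name and the page data are made up for the example.

```python
import hashlib
from typing import Dict, List


def merge_identical_pages(guest_pages: Dict[str, List[bytes]]) -> Dict[str, int]:
    """Count pages before and after merging identical content across guests."""
    total = 0
    unique = set()
    for pages in guest_pages.values():
        for page in pages:
            total += 1
            # Pages with the same content map to the same digest.
            unique.add(hashlib.sha256(page).digest())
    return {"total": total, "stored": len(unique), "saved": total - len(unique)}


# Two guests that both hold the same (hypothetical) shared-library page.
shared_lib = b"L" * 4096
example = merge_identical_pages({
    "guest1": [shared_lib, b"A" * 4096],
    "guest2": [shared_lib, b"B" * 4096],
})
```

The more similar the guests are (same OS, same libraries), the larger the `saved` count, which is why KSM raises the achievable guest density.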

libvirt and libvirt tools – The libvirt package is a hypervisor-independent virtualization API that is able to interact with the virtualization capabilities of a range of operating systems.

The libvirt package provides:

- A standard, generic, and stable virtualization layer to securely manage virtual machines on a host.

- A standard interface for managing local systems and networked hosts.

- All of the APIs required to provision, create, modify, monitor, control, migrate, and stop virtual machines, but only if the hypervisor supports these operations. Although multiple hosts may be accessed with libvirt simultaneously, the APIs are limited to single node operations.

libvirt focuses on managing single hosts and provides APIs to enumerate, monitor and use the resources available on the managed node, including CPUs, memory, storage, networking and Non-Uniform Memory Access (NUMA) partitions. The management tools can be located on separate physical machines from the host using secure protocols.

Red Hat Enterprise Linux 7 supports libvirt and includes libvirt-based tools as its default method for virtualization management (as in Red Hat Enterprise Virtualization Management).

The libvirt package is available as free software under the GNU Lesser General Public License. The libvirt project aims to provide a long term stable C API to virtualization management tools, running on top of varying hypervisor technologies. Tools of the libvirt package are

- virsh – The virsh command-line tool is built on the libvirt management API and operates as an alternative to the graphical virt-manager application. The virsh command can be used in read-only mode by unprivileged users or, with root access, full administration functionality. The virsh command is ideal for scripting virtualization administration and provides many functions such as installing, listing, starting, and stopping virtual machines.

- virt-manager – virt-manager is a graphical desktop tool for managing virtual machines. It allows access to graphical guest consoles and can be used to perform virtualization administration, virtual machine creation, migration, and configuration tasks. The ability to view virtual machines, host statistics, device information and performance graphs is also provided. The local hypervisor and remote hypervisors can be managed through a single interface.
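Because virsh is well suited to scripting, a common pattern is to wrap it in a small helper. The sketch below builds argument lists for a few standard subcommands (`list`, `start`, `shutdown`) and runs them with `subprocess`. The helper names `virsh_argv` and `run_virsh` and the guest name `guest1` are assumptions made for this example; actually running the commands requires a host with libvirt installed.

```python
import subprocess
from typing import List, Optional


def virsh_argv(subcommand: str, domain: Optional[str] = None,
               readonly: bool = False) -> List[str]:
    """Build the argument list for a virsh invocation without running it."""
    argv = ["virsh"]
    if readonly:
        argv.append("--readonly")  # read-only connection for unprivileged use
    argv.append(subcommand)
    if subcommand == "list":
        argv.append("--all")       # include guests that are shut off
    if domain is not None:
        argv.append(domain)
    return argv


def run_virsh(argv: List[str]) -> str:
    """Run virsh and return its standard output; raises if virsh fails."""
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return result.stdout


# Typical calls (not executed here):
#   run_virsh(virsh_argv("list", readonly=True))
#   run_virsh(virsh_argv("start", "guest1"))
#   run_virsh(virsh_argv("shutdown", "guest1"))
```

Keeping argument construction separate from execution makes the script easy to check on a machine that has no hypervisor.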

QEMU – QEMU is a generic and open source machine emulator and virtualizer. It can emulate a completely different machine architecture from the one on which it is running (e.g., emulating an ARM architecture on an x86 platform). The code for QEMU is mature and well tested, and as such, it is relied upon by many other virtualization platforms and projects.

When used as a machine emulator, QEMU can run OSes and programs made for one machine (e.g. an ARM board) on a different machine (e.g. your own PC). By using dynamic translation, it achieves very good performance.

When used as a virtualizer, QEMU achieves near native performance by executing the guest code directly on the host CPU. QEMU supports virtualization when executing under the Xen hypervisor or using the KVM kernel module in Linux. When using KVM, QEMU can virtualize x86, server and embedded PowerPC, and S390 guests.

QEMU can use other hypervisors like Xen or KVM to use CPU extensions (HVM) for virtualization.
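The difference between the two modes shows up directly on the QEMU command line: the emulator binary is named after the guest architecture, and KVM acceleration is switched on with `-enable-kvm` when guest and host architectures match. The helper below is a sketch that only assembles such a command line; `qemu_argv` and the image file names are hypothetical, and a bootable guest normally needs further options (firmware, kernel, network) that are omitted here.

```python
from typing import List, Optional


def qemu_argv(guest_arch: str, disk_image: str, memory_mb: int = 1024,
              machine: Optional[str] = None, use_kvm: bool = False) -> List[str]:
    """Assemble a qemu-system-* command line for emulation or virtualization."""
    argv = [f"qemu-system-{guest_arch}"]
    if machine is not None:
        argv += ["-machine", machine]  # board model, e.g. "virt" for ARM guests
    argv += ["-m", str(memory_mb),
             "-drive", f"file={disk_image},format=qcow2"]
    if use_kvm:
        argv.append("-enable-kvm")     # run guest code directly on the host CPU
    return argv


# Virtualizer: x86-64 guest on an x86-64 Linux host with KVM.
kvm_guest = qemu_argv("x86_64", "guest.qcow2", 2048, use_kvm=True)

# Emulator: 64-bit ARM guest on any host, using dynamic translation.
arm_guest = qemu_argv("aarch64", "arm.qcow2", machine="virt")
```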

Xen – This is a popular virtualization implementation, with a large community following. The code base is quite mature and well tested. It supports both the full and paravirtualization methods of virtualization. Xen is considered a high-performing virtualization platform. The Xen Project hypervisor runs in a more privileged CPU state than any other software on the machine.

Responsibilities of the hypervisor include memory management and CPU scheduling of all virtual machines (“domains”), and launching the most privileged domain (“dom0”) – the only virtual machine which by default has direct access to hardware. From the dom0 the hypervisor can be managed and unprivileged domains (“domU”) can be launched.

The dom0 domain is typically a version of Linux or BSD. User domains may either be traditional operating systems, such as Microsoft Windows under which privileged instructions are provided by hardware virtualization instructions (if the host processor supports x86 virtualization, e.g., Intel VT-x and AMD-V), or para-virtualized operating systems whereby the operating system is aware that it is running inside a virtual machine, and so makes hypercalls directly, rather than issuing privileged instructions.

Xen Project boots from a bootloader such as GNU GRUB, and then usually loads a paravirtualized host operating system into the host domain (dom0).

Xen virtualization types – Xen supports five different approaches to running the guest operating system: HVM (hardware virtual machine), HVM with PV drivers, PVHVM (HVM with PVHVM drivers), PVH (PV in an HVM container) and PV (paravirtualization).

Paravirtualization – modified guests

Xen supports a form of virtualization known as paravirtualization, in which guests run a modified operating system. The guests are modified to use a special hypercall ABI, instead of certain architectural features.

Through paravirtualization, Xen can achieve high performance even on its host architecture (x86) which has a reputation for non-cooperation with traditional virtualization techniques. Xen can run paravirtualized guests (“PV guests” in Xen terminology) even on CPUs without any explicit support for virtualization.

Paravirtualization avoids the need to emulate a full set of hardware and firmware services, which makes a PV system simpler to manage and reduces the attack surface exposed to potentially malicious guests. On 32-bit x86, the Xen host kernel code runs in Ring 0, while the hosted domains run in Ring 1 (kernel) and Ring 3 (applications).

Hardware-assisted virtualization, allowing for unmodified guests – CPUs that support virtualization make it possible to support unmodified guests, including proprietary operating systems (such as Microsoft Windows). This is known as hardware-assisted virtualization; in Xen it is known as hardware virtual machine (HVM).
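The two constraints described so far can be summarized as a small decision rule: PV needs a guest modified to use hypercalls but no CPU support, while HVM needs CPU virtualization extensions but no guest modification. The function below encodes only these two of Xen's five approaches and is an illustrative sketch; `usable_xen_modes` and its parameter names are invented for this example.

```python
from typing import List


def usable_xen_modes(cpu_has_virt_extensions: bool,
                     guest_is_pv_aware: bool) -> List[str]:
    """List which of PV and HVM can run a guest on a given host CPU."""
    modes = []
    if guest_is_pv_aware:
        # PV guests make hypercalls and run even on CPUs without
        # explicit virtualization support.
        modes.append("PV")
    if cpu_has_virt_extensions:
        # HVM relies on extensions such as Intel VT-x or AMD-V and
        # accepts unmodified guests.
        modes.append("HVM")
    return modes
```

For example, an unmodified Windows guest on a CPU without virtualization extensions yields an empty list, which matches the text: such a guest cannot be paravirtualized and has no hardware support to fall back on.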

HVM extensions provide additional execution modes, with an explicit distinction between the most-privileged modes used by the hypervisor with access to the real hardware (called “root mode” in x86) and the less-privileged modes used by guest kernels and applications with “hardware” accesses under complete control of the hypervisor (in x86, known as “non-root mode”; both root and non-root mode have Rings 0–3).

Both Intel and AMD have contributed modifications to Xen to support their respective Intel VT-x and AMD-V architecture extensions. Support for ARM v7A and v8A virtualization extensions came with Xen 4.3.

HVM extensions also often offer new instructions to support direct calls by a paravirtualized guest/driver into the hypervisor, typically used for I/O or other operations needing high performance. These allow HVM guests with suitable minor modifications to gain many of the performance benefits of paravirtualised I/O.

In versions of Xen up to 4.2, only fully virtualised HVM guests can make use of hardware support for multiple independent levels of memory protection and paging. As a result, for some workloads, HVM guests with PV drivers (also known as PV-on-HVM, or PVHVM) provide better performance than pure PV guests.

Xen HVM has device emulation based on the QEMU project to provide I/O virtualization to the virtual machines. The system emulates hardware via a patched QEMU “device manager” (qemu-dm) daemon running as a backend in dom0. This means that the virtualized machines see an emulated version of a fairly basic PC. In a performance-critical environment, PV-on-HVM disk and network drivers are used during normal guest operation, so that the emulated PC hardware is mostly used for booting.

User-Mode Linux (UML) – This is one of the earliest virtualization implementations for Linux. As the name implies, virtualization is implemented entirely in user space. This singular attribute gives it the advantage of being quite secure, since its components run in the context of a regular user. Running entirely in user space also gives this implementation the disadvantage of not being very fast.

UML behaves somewhat like an emulator: it is a small, self-contained Linux system built from a standard Linux kernel that is configured to run as a normal user process. Instead of booting up and talking directly to hardware, it runs as a process, and its device drivers use the C library and standard system calls to ask the host system for anything (memory, network, disk I/O) it needs. The UML process then hands out memory and timeslices to any child processes it starts, and handles all their system calls.

In UML environments, host and guest kernel versions need not match, so it is entirely possible to test a “bleeding edge” version of Linux in User-mode on a system running a much older kernel. UML also allows kernel debugging to be performed on one machine, where other kernel debugging tools (such as kgdb) require two machines connected with a null modem cable.

The UML guest (a Linux ELF binary) was originally available as a patch for some kernel versions above 2.2.x, and a host running any kernel version above 2.2.x could run it easily in thread mode (that is, without SKAS3).

As of Linux 2.6.0, UML is integrated into the main kernel source tree. A method of running a separate kernel address space (SKAS) that does not require host kernel patching has been implemented. This improves performance and security over the old Traced Thread approach, in which processes running in the UML share the same address space from the host’s point of view, so the memory inside the UML is not protected by the memory management unit. On a non-SKAS host, unlike with the current SKAS-based UML, buggy or malicious software inside a UML could read the memory space of other UML processes or even the UML kernel memory.

Virtualization Tools

- virsh – virsh is a command-line interface (CLI) tool for managing the hypervisor and guest virtual machines. The virsh command-line tool is built on the libvirt management API and operates as an alternative to the qemu-kvm command and the graphical virt-manager application. Unprivileged users can run virsh in read-only mode; with root access, it provides full administrative functionality. The virsh command is ideal for scripting virtualization administration. It is also a main management interface for guest domains and can be used to create, pause, and shut down domains, as well as list current domains. This tool is installed as part of the libvirt-client package.

- virt-manager – virt-manager is a lightweight graphical tool for managing virtual machines. It provides the ability to control the life cycle of existing machines, provision new machines, manage virtual networks, access the graphical console of virtual machines, and view performance statistics. This tool is provided by its own package called virt-manager.

- virt-install – virt-install is a command-line tool to provision new virtual machines. It supports both text-based and graphical installations, using serial console, SPICE, or VNC client/server pair graphics. Installation media can be local, or exist remotely on an NFS, HTTP, or FTP server. The tool can also be configured to run unattended and use the kickstart method to prepare the guest, allowing for easy automation of installation. This tool is included in the virt-install package.

- guestfish – guestfish is a shell and command-line tool for examining and modifying virtual machine disk images. This tool uses libguestfs and exposes all functionality provided by the guestfs API.
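Because virsh is suited to scripting, a short sketch may help. The example below builds a read-only `virsh list --all --name` invocation and parses its output into a list of domain names. The helper names and the default `qemu:///system` connection URI are assumptions made for this illustration.

```python
def virsh_list_command(uri="qemu:///system", read_only=True):
    """Build the argument list for listing all domains by name."""
    cmd = ["virsh", "--connect", uri]
    if read_only:
        # Read-only connections are enough for listing domains.
        cmd.append("--readonly")
    cmd += ["list", "--all", "--name"]
    return cmd


def parse_domain_names(output):
    """Turn the one-name-per-line output into a list, skipping blank lines."""
    return [line.strip() for line in output.splitlines() if line.strip()]
```

On a host with libvirt installed, the command list can be passed to `subprocess.run(cmd, capture_output=True, text=True, check=True)` and the resulting `stdout` handed to `parse_domain_names`.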

The following tools are used to access a guest virtual machine’s disk via the host. The guest’s disk is usually accessed directly via the disk-image file located on the host. However, it is sometimes possible to gain access via the libvirt domain. Most of the commands that follow can work with either the disk image or the libvirt domain to access the guest’s disk.

- guestmount – A command-line tool used to mount virtual machine file systems and disk images on the host machine. This tool is installed as part of the libguestfs-tools-c package.

- virt-builder – A command-line tool for quickly building and customizing new virtual machines. This tool is installed in Red Hat Enterprise Linux 7.1 and later as part of the libguestfs-tools package.

- virt-cat – A command-line tool that can be used to quickly view the contents of one or more files in a specified virtual machine’s disk or disk image. This tool is installed as part of the libguestfs-tools package.

- virt-customize – A command-line tool for customizing virtual machine disk images. virt-customize can be used to install packages, edit configuration files, run scripts, and set passwords. This tool is installed in Red Hat Enterprise Linux 7.1 and later as part of the libguestfs-tools package.

- virt-df – A command-line tool used to show the actual physical disk usage of virtual machines, similar to the command-line tool df. Note that this tool does not work across remote connections. It is installed as part of the libguestfs-tools package.

- virt-diff – A command-line tool for showing differences between the file systems of two virtual machines, for example, to discover which files have changed between snapshots. This tool is installed in Red Hat Enterprise Linux 7.1 and later as part of the libguestfs-tools package.

- virt-edit – A command-line tool used to edit files that exist on a specified virtual machine. This tool is installed as part of the libguestfs-tools package.

- virt-filesystems – A command-line tool used to discover file systems, partitions, logical volumes and their sizes in a disk image or virtual machine. One common use is in shell scripts, to iterate over all file systems in a disk image. This tool is installed as part of the libguestfs-tools package. This tool replaces virt-list-filesystems and virt-list-partitions.

- virt-inspector – A command-line tool that can examine a virtual machine or disk image to determine the version of its operating system and other information. It can also produce XML output, which can be piped into other programs. Note that virt-inspector can only inspect one domain at a time. This tool is installed as part of the libguestfs-tools package.

- virt-log – A command-line tool for listing log files from virtual machines. This tool is installed in Red Hat Enterprise Linux 7.1 and later as part of the libguestfs-tools package.

- virt-ls – A command-line tool that lists files and directories inside a virtual machine. This tool is installed as part of the libguestfs-tools package.

- virt-make-fs – A command-line tool for creating a file system based on a tar archive or files in a directory. It is similar to tools like mkisofs and mksquashfs, but it can create common file system types such as ext2, ext3 and NTFS, and the size of the file system created can be equal to or greater than the size of the files it is based on. This tool is provided as part of the libguestfs-tools package.

- virt-rescue – A command-line tool that provides a rescue shell and some simple recovery tools for unbootable virtual machines and disk images. It can be run on any virtual machine known to libvirt, or directly on disk images. This tool is installed as part of the libguestfs-tools package.

- virt-resize – A command-line tool to resize virtual machine disks, and resize or delete any partitions on a virtual machine disk. It works by copying the guest image and leaving the original disk image untouched. This tool is installed as part of the libguestfs-tools package.

- virt-viewer – A minimal tool for displaying the graphical console of a virtual machine via the VNC and SPICE protocols. This tool is provided by its own package: virt-viewer.

- virt-what – A shell script that detects whether a program is running in a virtual machine. This tool is provided by its own package: virt-what.
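Several of the libguestfs tools above accept either a disk image (`-a`) or a libvirt domain name (`-d`) as their target. The sketch below shows one way to build such command lines for virt-cat and virt-filesystems. The helper names and the example guest name are hypothetical.

```python
def guest_target_args(disk_image=None, domain=None):
    """Return the target arguments: -a for a disk image, -d for a libvirt domain."""
    if (disk_image is None) == (domain is None):
        raise ValueError("specify exactly one of disk_image or domain")
    if disk_image is not None:
        return ["-a", disk_image]
    return ["-d", domain]


def virt_cat_command(path_in_guest, **target):
    """Build a virt-cat command that prints one file from inside the guest."""
    return ["virt-cat", *guest_target_args(**target), path_in_guest]


def virt_filesystems_command(**target):
    """Build a virt-filesystems command listing everything in long, human-readable form."""
    return ["virt-filesystems", *guest_target_args(**target), "--all", "--long", "-h"]
```

For example, `virt_cat_command("/etc/hostname", domain="rhel7")` targets a guest known to libvirt, while passing `disk_image=` instead reads the image file directly.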

Using KVM

Requirements – To set up a KVM virtual machine on Red Hat Enterprise Linux 7, your system must meet several criteria:

Architecture – On certain architectures, KVM virtualization is not supported with Red Hat Enterprise Linux.

Disk space and RAM – For virtualization to work on your system, you require at minimum:

- 6 GB free disk space;

- 2 GB of RAM.
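These minimums can be checked with a short script. The sketch below treats 1 GB as 2^30 bytes and assumes that guest images will live under /var/lib/libvirt/images; both are assumptions, as are the function names. Note that a machine with 2 GB of installed RAM usually reports slightly less in /proc/meminfo, so the strict comparison here is a simplification.

```python
import shutil

MIN_FREE_DISK_BYTES = 6 * 1024**3  # 6 GB free disk space
MIN_RAM_BYTES = 2 * 1024**3        # 2 GB of RAM


def parse_mem_total_bytes(meminfo_text):
    """Extract MemTotal from /proc/meminfo text (the value is reported in kB)."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) * 1024
    raise ValueError("MemTotal not found")


def meets_minimums(free_disk_bytes, ram_bytes):
    """Return True when both minimums are satisfied."""
    return free_disk_bytes >= MIN_FREE_DISK_BYTES and ram_bytes >= MIN_RAM_BYTES


def check_host(image_dir="/var/lib/libvirt/images"):
    """Check this host; image_dir is an assumed location for guest images."""
    free = shutil.disk_usage(image_dir).free
    with open("/proc/meminfo") as f:
        ram = parse_mem_total_bytes(f.read())
    return meets_minimums(free, ram)
```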

Required packages for virtualization – Before you can use virtualization, a basic set of virtualization packages must be installed on your computer. The virtualization packages can be installed either during the host installation sequence, or after host installation using the yum command.

To use virtualization on Red Hat Enterprise Linux, you require at minimum the qemu-kvm, qemu-img, and libvirt packages. These provide the user-level KVM emulator, disk image manager, and virtualization management tools.
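A minimal sketch of how a script might work out which of these packages still need installing and build the matching yum command follows. The helper names are hypothetical, and the set of installed packages is passed in rather than queried, to keep the example self-contained.

```python
REQUIRED_PACKAGES = ("qemu-kvm", "qemu-img", "libvirt")


def missing_packages(installed):
    """Return the required packages that are absent from the installed names."""
    installed = set(installed)
    return [pkg for pkg in REQUIRED_PACKAGES if pkg not in installed]


def yum_install_command(packages):
    """Build a non-interactive yum command, or an empty list if nothing is missing."""
    return ["yum", "install", "-y", *packages] if packages else []
```

In practice, the installed state could be obtained by running `rpm -q` for each package name, which exits with a non-zero status when the package is not installed.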

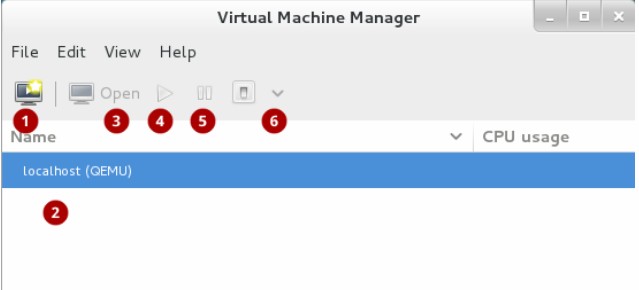

The Virtual Machine Manager, also known as virt-manager, is a graphical tool for quickly deploying virtual machines in Red Hat Enterprise Linux. Open the Virtual Machine Manager application from the Applications menu and System Tools submenu. The following image shows the Virtual Machine Manager interface. This interface allows you to control all of your virtual machines from one central location:

Commonly-used functions include:

- Create new virtual machine: Start here to create a new virtual machine.

- Virtual machines: A list of all virtual machines, or guests. When a virtual machine is created, it will be listed here. When a guest is running, an animated graph shows the guest’s CPU usage under CPU usage. After selecting a virtual machine from this list, use the following buttons to control the selected virtual machine’s state:

- Open: Opens the guest virtual machine console and details in a new window.

- Run: Turns on the virtual machine.

- Pause: Pauses the virtual machine.

- Shut down: Shuts down the virtual machine. Clicking on the arrow displays a dropdown menu with several options for turning off the virtual machine, including Reboot, Shut Down, Force Reset, Force Off, and Save.
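For scripted use, most of these buttons have an approximate counterpart among the virsh subcommands. The mapping below is a sketch rather than a description of how Virtual Machine Manager works internally. The Save option is left out because it also needs a destination for the saved state, and the function name is hypothetical.

```python
# Approximate virsh subcommand for each button or menu entry.
BUTTON_TO_VIRSH = {
    "Run": "start",
    "Pause": "suspend",
    "Shut Down": "shutdown",
    "Reboot": "reboot",
    "Force Reset": "reset",
    "Force Off": "destroy",
}


def virsh_command_for(button, domain):
    """Build the virsh command that roughly matches a button press."""
    try:
        subcommand = BUTTON_TO_VIRSH[button]
    except KeyError:
        raise ValueError(f"no virsh mapping for button: {button!r}") from None
    return ["virsh", subcommand, domain]
```

Note that `destroy` stops the guest immediately without a clean shutdown (it does not delete the guest), which is why it lines up with Force Off.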

Creating a guest virtual machine with Virtual Machine Manager

- Open Virtual Machine Manager. Launch the Virtual Machine Manager application from the Applications menu and System Tools submenu.

- Create a new virtual machine. Click the Create a new virtual machine button.

- Specify the installation method. Start the creation process by choosing the method of installing the new virtual machine.

- Locate installation media. Provide the location of the ISO that the guest virtual machine will install the operating system from. Select the OS type and Version of the installation, ensuring that you select the appropriate OS type for your virtual machine. For this example, select Linux from the dropdown in OS type and choose Red Hat Enterprise Linux 7 from the dropdown in Version, and click the Forward button. Note that you can also let the Virtual Machine Manager automatically detect the operating system by clicking the respective checkbox.

- Configure memory and CPU. Select the amount of memory and the number of CPUs to allocate to the virtual machine. The wizard shows the number of CPUs and amount of memory available to allocate.

- Configure storage. Assign storage to the guest virtual machine. The wizard shows options for storage, including where to store the virtual machine on the host machine. For this tutorial, select the default settings and click the Forward button.

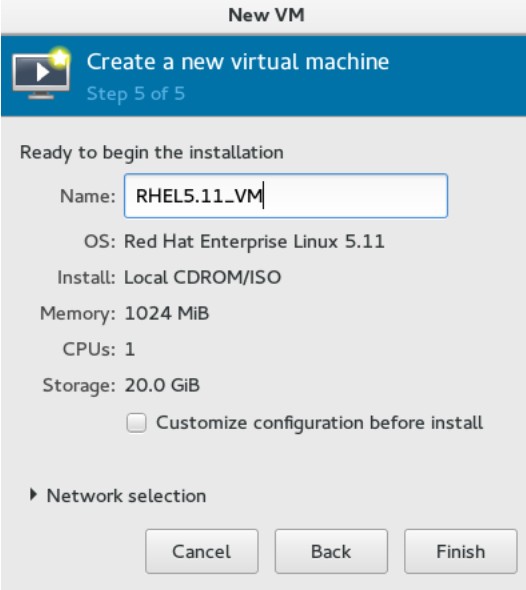

- Naming and final overview. Finally, select a name for the virtual machine, verify the settings, and click the Finish button. Virtual Machine Manager will then create a virtual machine with your specified hardware settings.

- After Virtual Machine Manager has created your Red Hat Enterprise Linux 7 virtual machine, follow the instructions in the Red Hat Enterprise Linux 7 installer to complete the installation of the virtual machine’s operating system.
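The same choices made in the wizard (name, installation media, memory, CPUs, storage, and OS version) can also be passed to the virt-install command-line tool. The sketch below builds such a command. The function name, the default values, and the `rhel7.0` OS variant identifier are assumptions for illustration, not values taken from the steps above.

```python
def virt_install_command(name, iso_path, memory_mib=2048, vcpus=1,
                         disk_gib=6, os_variant="rhel7.0"):
    """Build a virt-install command mirroring the wizard's choices."""
    return [
        "virt-install",
        "--name", name,                # name of the virtual machine
        "--memory", str(memory_mib),   # guest memory in MiB
        "--vcpus", str(vcpus),         # number of virtual CPUs
        "--disk", f"size={disk_gib}",  # new disk in default storage, size in GiB
        "--cdrom", iso_path,           # installation ISO
        "--os-variant", os_variant,    # assumed OS identifier
    ]
```

For instance, `virt_install_command("rhel7-guest", "/tmp/rhel7.iso")` yields an argument list that could be run with `subprocess.run(cmd, check=True)` on a host where virt-install is available. The guest name and ISO path here are placeholders.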

You can view a virtual machine’s console by selecting a virtual machine in the Virtual Machine Manager window and clicking Open. You can operate your Red Hat Enterprise Linux 7 virtual machine from the console in the same way as a physical system.
