HDFS Interfaces

Most Hadoop filesystem interactions are mediated through the Java API. The filesystem shell, for example, is a Java application that uses the Java FileSystem class.

HTTP

Hortonworks developed an additional API to support access through standard REST operations. The HTTP REST API exposed by the WebHDFS protocol makes it easier for programs written in other languages to interact with HDFS. The HTTP interface is slower than the native Java client, so it should be avoided for very large data transfers if possible. There are two ways of accessing HDFS over HTTP: directly, where the HDFS daemons serve HTTP requests to clients; and via a proxy (or proxies), which accesses HDFS on the client’s behalf using the usual DistributedFileSystem API. Both use the WebHDFS protocol.

WebHDFS is built on the standard HTTP operations GET, PUT, POST and DELETE. Operations such as OPEN, GETFILESTATUS and LISTSTATUS use HTTP GET; operations such as CREATE, MKDIRS, RENAME and SETPERMISSION use HTTP PUT; APPEND uses HTTP POST; and DELETE uses HTTP DELETE. Authentication is based on the user.name query parameter (passed as part of the HTTP query string) or, if security is turned on, on Kerberos. WebHDFS requires that the client have a direct connection to the NameNode and the DataNodes via the predefined ports. The standard URL format is as follows:

http://<HOST>:<HTTP_PORT>/webhdfs/v1/<PATH>?op=<OPERATION>

WebHDFS is switched on by the following property in hdfs-site.xml:

<property> <name>dfs.webhdfs.enabled</name> <value>true</value> </property>
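The mapping from WebHDFS operations to HTTP methods can be sketched in a few lines of Python. This is only an illustration: the helper name webhdfs_request, the host name, and port 9870 (the default NameNode HTTP port in Hadoop 3; Hadoop 2 used 50070) are assumptions, not values from this article.

```python
from urllib.parse import quote, urlencode

# HTTP method used by each WebHDFS operation named in the text above.
HTTP_METHOD = {
    "OPEN": "GET", "GETFILESTATUS": "GET", "LISTSTATUS": "GET",
    "CREATE": "PUT", "MKDIRS": "PUT", "RENAME": "PUT", "SETPERMISSION": "PUT",
    "APPEND": "POST",
    "DELETE": "DELETE",
}


def webhdfs_request(host, port, path, op, user=None, **params):
    """Return (http_method, url) for one WebHDFS operation.

    Hypothetical helper: it only builds the request line, it sends nothing.
    """
    op = op.upper()
    method = HTTP_METHOD[op]          # KeyError for operations not listed above
    query = {"op": op}
    if user is not None:
        query["user.name"] = user     # simple (non-Kerberos) authentication
    query.update(params)              # operation-specific parameters
    url = f"http://{host}:{port}/webhdfs/v1{quote(path)}?{urlencode(query)}"
    return method, url


if __name__ == "__main__":
    # Host and port are placeholders for a real NameNode address.
    print(webhdfs_request("namenode.example.com", 9870,
                          "/user/alice/data.txt", "OPEN", user="alice"))
```

Keeping the URL construction separate from the network call makes it easy to check which method and query string a given operation would use before pointing it at a real cluster.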

WebHDFS Advantages

- Individual calls return much more quickly than a regular “hadoop fs” command, which has to start a JVM for every invocation. The difference is easy to see on a cluster holding terabytes of data.

- Non-Java clients that need access to HDFS can use it without any Hadoop libraries.

Enable WebHDFS

- Open the HDFS configuration file (hdfs-site.xml).

- Set dfs.webhdfs.enabled to true.

- Restart HDFS daemons.

We can now access HDFS through the WebHDFS API, for example with curl calls.
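A directory listing over WebHDFS might look like the following sketch. With curl the equivalent request would be roughly curl -i "http://<HOST>:<HTTP_PORT>/webhdfs/v1/<PATH>?op=LISTSTATUS". The function name list_status, the base URL, and the user name are assumptions made for illustration; the opener parameter is only there so the function can be exercised without a running cluster.

```python
import json
import urllib.request


def list_status(base_url, path, user, opener=urllib.request.urlopen):
    """List a directory through WebHDFS and return the entry names.

    base_url is something like "http://namenode.example.com:9870" (assumed);
    opener is any callable that takes a URL and returns a readable response.
    """
    url = f"{base_url}/webhdfs/v1{path}?op=LISTSTATUS&user.name={user}"
    with opener(url) as response:
        body = json.load(response)
    # LISTSTATUS answers with {"FileStatuses": {"FileStatus": [ {...}, ... ]}}.
    return [entry["pathSuffix"] for entry in body["FileStatuses"]["FileStatus"]]


if __name__ == "__main__":
    # Needs a reachable NameNode with WebHDFS enabled; the address is a placeholder.
    print(list_status("http://namenode.example.com:9870", "/tmp", "alice"))
```

LISTSTATUS is a plain GET, so no request body or redirect handling is needed here.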

Data Read and Write Process

An application adds data to HDFS by creating a new file and writing the data to it. After the file is closed, the bytes written cannot be altered or removed except that new data can be added to the file by reopening the file for append. HDFS implements a single-writer, multiple-reader model.

The HDFS client that opens a file for writing is granted a lease for the file; no other client can write to the file. The writing client periodically renews the lease by sending a heartbeat to the NameNode. When the file is closed, the lease is revoked. The lease duration is bound by a soft limit and a hard limit. Until the soft limit expires, the writer is certain of exclusive access to the file. If the soft limit expires and the client has neither closed the file nor renewed the lease, another client can preempt the lease. If the hard limit (one hour) expires and the client has still not renewed the lease, HDFS assumes that the client has quit, automatically closes the file on behalf of the writer, and recovers the lease. The writer’s lease does not prevent other clients from reading the file; a file may have many concurrent readers.
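The soft and hard limits can be pictured with a small toy model. This is not NameNode code: the class and method names are invented for illustration, and the one-minute soft limit is an assumption (only the one-hour hard limit is stated above).

```python
SOFT_LIMIT_S = 60          # assumed soft limit: one minute
HARD_LIMIT_S = 60 * 60     # hard limit: one hour, as stated in the text


class Lease:
    """Toy model of a write lease held by a single client."""

    def __init__(self, holder, now):
        self.holder = holder
        self.last_renewed = now

    def renew(self, client, now):
        # Only the current holder may renew (the writer's heartbeat).
        if client != self.holder:
            raise PermissionError(f"{client} does not hold this lease")
        self.last_renewed = now

    def can_be_preempted(self, now):
        # After the soft limit another client may take over the lease.
        return now - self.last_renewed > SOFT_LIMIT_S

    def must_be_recovered(self, now):
        # After the hard limit the file is closed on the writer's behalf.
        return now - self.last_renewed > HARD_LIMIT_S


if __name__ == "__main__":
    lease = Lease("writer-1", now=0)
    lease.renew("writer-1", now=50)
    print(lease.can_be_preempted(now=100), lease.must_be_recovered(now=100))
```

Times are plain seconds passed in by the caller, which keeps the model deterministic and easy to step through.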

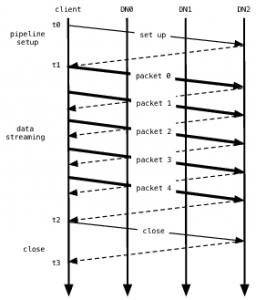

An HDFS file consists of blocks. When there is a need for a new block, the NameNode allocates a block with a unique block ID and determines a list of DataNodes to host replicas of the block. The DataNodes form a pipeline, the order of which minimizes the total network distance from the client to the last DataNode. Bytes are pushed to the pipeline as a sequence of packets. The bytes that an application writes first buffer at the client side. After a packet buffer is filled (typically 64 KB), the data are pushed to the pipeline. The next packet can be pushed to the pipeline before receiving the acknowledgment for the previous packets. The number of outstanding packets is limited by the outstanding packets window size of the client.
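The packet buffering and the outstanding-packets window can be illustrated with a short simulation. It is a simplified sketch, not the real client: acknowledgments are assumed to arrive in order and exactly when the window is full, and the function names are made up.

```python
from collections import deque

PACKET_SIZE = 64 * 1024  # typical packet buffer size mentioned in the text


def packetize(data, packet_size=PACKET_SIZE):
    """Split the application's bytes into packets of at most packet_size."""
    return [data[i:i + packet_size] for i in range(0, len(data), packet_size)]


def send_with_window(packets, window_size):
    """Return the ordered list of ("send", seq) and ("ack", seq) events.

    A packet is sent without waiting for earlier acks as long as fewer than
    window_size packets are outstanding; otherwise the oldest ack is awaited.
    """
    in_flight = deque()
    events = []
    for seq in range(len(packets)):
        if len(in_flight) == window_size:
            events.append(("ack", in_flight.popleft()))
        in_flight.append(seq)
        events.append(("send", seq))
    while in_flight:                       # drain the remaining acks
        events.append(("ack", in_flight.popleft()))
    return events


if __name__ == "__main__":
    packets = packetize(b"x" * (150 * 1024))
    print([len(p) for p in packets])
    print(send_with_window(packets, window_size=2))
```

With a window of 2, the second packet goes out before the first is acknowledged, but the third has to wait for the first acknowledgment.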

After data are written to an HDFS file, HDFS does not provide any guarantee that data are visible to a new reader until the file is closed. If a user application needs the visibility guarantee, it can explicitly call the hflush operation. Then the current packet is immediately pushed to the pipeline, and the hflush operation will wait until all DataNodes in the pipeline acknowledge the successful transmission of the packet. All data written before the hflush operation are then certain to be visible to readers.
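The visibility rule can be modelled with a toy file object. The sketch takes the most pessimistic reading of the paragraph above, namely that a reader sees only what is guaranteed to be visible; real HDFS may expose more. The class name ToyHdfsFile and its methods are invented for illustration and are not the Hadoop API.

```python
class ToyHdfsFile:
    """Toy model: readers see only bytes covered by an hflush or a close."""

    def __init__(self):
        self._written = bytearray()
        self._visible_length = 0
        self.closed = False

    def write(self, data):
        if self.closed:
            raise ValueError("file is closed")
        self._written += data              # buffered, not yet guaranteed visible

    def hflush(self):
        # Everything written so far becomes visible to new readers.
        self._visible_length = len(self._written)

    def close(self):
        self.hflush()
        self.closed = True

    def read_visible(self):
        return bytes(self._written[:self._visible_length])


if __name__ == "__main__":
    f = ToyHdfsFile()
    f.write(b"first record\n")
    print(f.read_visible())   # nothing guaranteed yet
    f.hflush()
    print(f.read_visible())   # the first record is now guaranteed
```

An application that needs readers to see each record promptly would call hflush after every record, at the cost of waiting for the pipeline acknowledgments each time.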
